I was sitting in a room full of traders explaining what we were building when the head of trading interrupted me.

“You have that data on a stock-by-stock basis?” he asked.

“Yes.”

He paused and surveyed the room. Pauses are long when you’re customerless, trying to launch a product, and demoing to one of the world’s largest asset service providers. It was as if he were considering a trading decision. Then, to his traders, he spoke again.

“We have to be in this beta program.”

Free data can be the most expensive kind.

When you leave everything behind, where do you start? This was the question I faced eighteen months before sitting in that room full of traders.

I had decided to build a business. I had trading knowledge, I understood data strategy and analytics, and I had learned how to create financial technology. Although this framework was valuable, it could not be used without data and technology. So, where to begin?

With data. And technology. The product of which is analytics.

The analytics stack we created is a unique combination of technology. It is the result of connecting fit-for-purpose components in an alignment that produces more value than the sum of their parts. We started with a minimally viable stack. Emphasis on minimally. Now it has been re-architected into a modern stack.[1]

But even the greatest technology stack is useless without data. So while we needed data and technology to begin, data is the real genesis of analytics. In financial services, and portfolio management specifically, market and reference data are minimum barriers to entry. What gamblers call table stakes.

That doesn’t mean market and reference data are easy to solve. Legacy providers have increased the barriers to entry with cost-prohibitive pricing. Sourcing market data from the NYSE or NASDAQ on a bootstrapped budget does not work. Equally impossible is obtaining even the most common industry and sector reference data. S&P Global has artfully created a monopoly on classifying businesses like United Airlines as an airline.

Our “where-to-start” question occurred as an upstart U.S. stock exchange sought to disrupt traditional exchanges. IEX was making its pricing information available via an API. For free. This was before IEX Cloud, the IEX data offering, existed.

Free works on a bootstrapped budget. IEX was playing a long-term game we were happy to join.

Most product decisions are tradeoffs. And disrupting market data pricing created tradeoffs for IEX. Near-real-time is not exactly the same as real-time, for instance. And United Airlines might be classified as a transportation company rather than an airline.

Even after we developed an interface to IEX (which was not free) and accepted the data product tradeoffs, this was merely the price of entry into the world of financial technology.

We still needed data that went beyond table stakes. Preferably free.

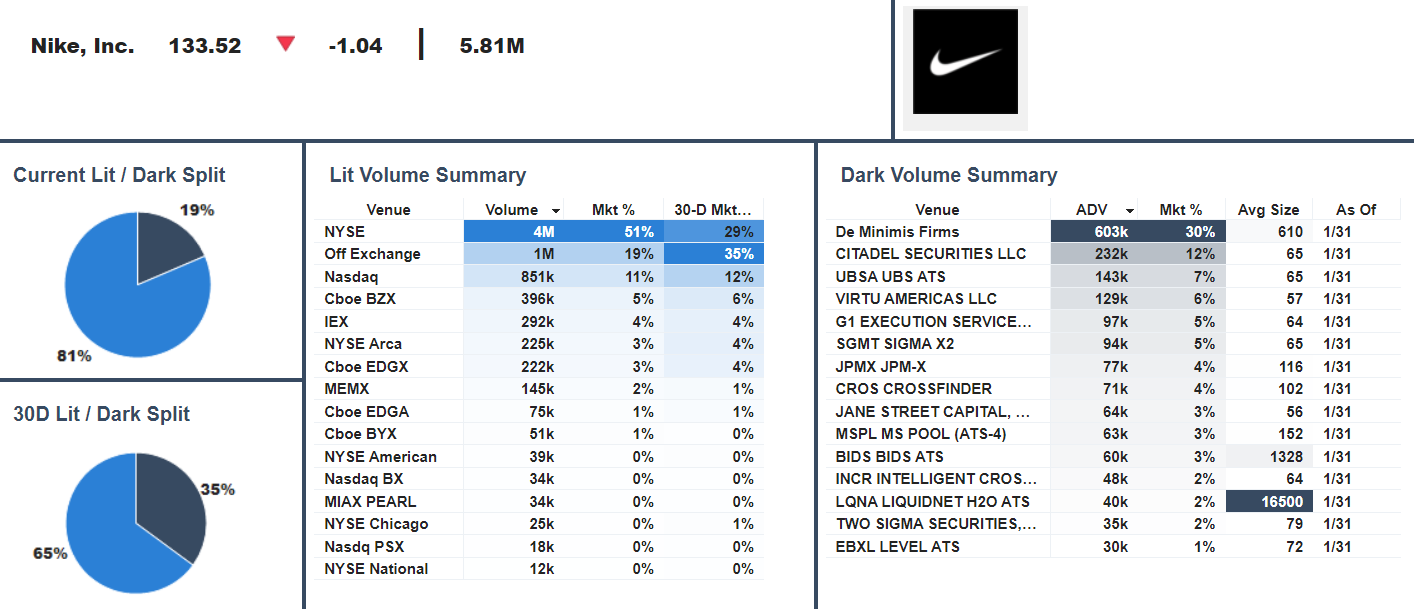

Enter FINRA. It is the source of the data that, when captured, transformed, and visualized, prompted that head trader to interrupt our demo. It’s important to pay attention to when head traders interrupt demos.

Like so many of our product beginnings, I do not recall how I learned that FINRA was making off-exchange market activity available. Upon discovering it I realized it had already been available for years. FINRA’s website had an OTC Transparency Section reporting summarized monthly volume activity.

More than 30 U.S. crossing platforms were designated by FINRA as Alternative Trading Systems (ATSs). Each was required to report to the FINRA / NASDAQ Trade Reporting Facilities (TRFs) in real time. But the TRF only records volume and price. Traders know something transacted off-exchange, but they do not know where it transacted. This is why ATSs are commonly referred to as dark pools.

The industry is generally, if slowly, moving towards transparency. At some point FINRA began to report not only monthly summaries, but weekly details. The weekly reports attributed ATS activity by symbol.

This prompted a question: is there signal in the FINRA data?

There has always been a concentration of ATS providers in Boston. It’s likely the number 2 geographic location for ATSs, after NYC. As a former Boston equities trader I’d always followed the local ATS firms. They were all competing to capture the same 30% to 40% of U.S. equities volume that trades off listed stock exchanges.

I was working at State Street when the bank acquired Pulse Trading, primarily for its BlockCross ATS. The acquisition, integration, and subsequent sale of BlockCross to Instinet could be a book by itself spanning M&A, corporate politics, trading scandals, and the glass box in which the BlockCross team operated.[2]

FINRA’s monthly summaries of BlockCross and all ATSs are released on a one-month lag. January data, for instance, is not released until March 1st. FINRA’s weekly detailed reports are released on a 2-to-4-week lag depending on how FINRA classifies the company being traded. Two tiers distinguish between more liquid (Tier 1) and less liquid (Tier 2) stocks.

Information value decays. FINRA reports can be perceived as not useful because of the time lag between activity and reporting. Surely the data would be more valuable if it were real-time. But I wasn’t convinced the lag removed all information value. In fact, with some data sets, historical information can actually accrue value when augmented with other data or processed in novel ways.

Intelligent analytics can slow, stop, or reverse information value decay.

We began manually downloading FINRA reports a couple of years before Mark Enriquez became a Point Focal angel investor. Mark was the founder of Pulse Trading, the firm that created BlockCross.

Some things really do come full circle.

FINRA data is free, which is one reason we started with it. Usually, you get what you pay for in life, but not all the time. Stock markets illustrate exceptions to this rule every day.

The value of free data can exceed its cost.

FINRA’s reports were manual and messy. The time it took to source and organize the reports nearly doubled the 2–4-week reporting lag. And while the FINRA data was the most granular off-exchange information available, it was still aggregated by week. We began to question whether it had value.

Then we realized FINRA had made the reports programmatically accessible.

We had already made sense of the monthly ATS reports, thanks in part to the Tabb Group. It was Larry Tabb who interrupted a very early demo of what we were building to ask, “How do we partner?” Ever since that question we have supported Tabb Forum’s Equities Liquidity Matrix.

Converting Tabb Group’s PDF reports into a web application taught us a lot about exchange and ATS data. It also helped teach us how to automate a fintech data operation with our analytics stack. What used to take several analysts many days to produce static reports now takes zero analysts zero days to produce interactive analytics.

TABB Forum Equities Liquidity Matrix (Free TF Subscription Required)

This understanding of off-exchange data and analytics helped shape the first piece of our Focus List, Dark Venue Analytics.

We had enough market structure information to start telling a new story. Our narrow but deep analytics premise started with free, off-exchange venue data. We coupled that with volume by venue and large trade data from IEX Cloud. Combining traditional and alternative market structure content created a new liquidity perspective. A new market structure story. New analytics.

In real time, we could see what was happening on lit exchanges. We could also see what was happening in aggregate off-exchange, via the trade reporting facilities. Now we could also see what had happened historically off-exchange across alternative trading systems and brokers.

We had three pieces of the market structure picture. Real-time on-exchange detail, real-time aggregate off-exchange summaries, and historical off-exchange detail. The question was whether these three components could be used to derive meaningful information about real-time, off-exchange detail.

Could we determine where liquidity was most likely trading off-exchange before FINRA reported the answer? We are still writing the story and analyzing the answer, but one thing we can say with confidence is that three pieces of market structure data are better than two.
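To make the question concrete, here is a deliberately naive sketch of the idea. It is an illustration under stated assumptions, not a description of any production model. It assumes each venue’s share of off-exchange volume in the most recent historical reports still holds today, then splits a real-time aggregate off-exchange figure across venues in those proportions. The function name, the venue names, and every number are hypothetical.

```python
from typing import Dict


def estimate_venue_volume(
    historical_venue_volume: Dict[str, float],
    realtime_off_exchange_volume: float,
) -> Dict[str, float]:
    """Split an aggregate off-exchange volume across venues.

    Assumption (hypothetical): each venue keeps the same share of
    off-exchange volume it had in the historical reporting window.
    """
    total = sum(historical_venue_volume.values())
    if total <= 0:
        raise ValueError("historical volumes must sum to a positive number")
    return {
        venue: realtime_off_exchange_volume * volume / total
        for venue, volume in historical_venue_volume.items()
    }


# Made-up historical share counts for one symbol across three venues.
historical = {"ATS_A": 600_000, "ATS_B": 300_000, "ATS_C": 100_000}

# Made-up aggregate off-exchange volume observed so far today.
estimate = estimate_venue_volume(historical, realtime_off_exchange_volume=50_000)
print(estimate)
```

A static-share split like this is the simplest possible baseline. The open question in the text is whether the real-time on-exchange detail can improve on it.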

Free FINRA data is valuable partly because it’s messy, incomplete, and difficult to access. These characteristics have obscured the data from financial technology.

At Tabb Group’s Fintech Festival in 2019 we had a prototype of single-stock off-exchange analytics cobbled together by Northeastern interns. Clearpool, a dominant algo provider with TCA analytics, had a booth across from Point Focal.

Vendor halls are a petri dish of competitive intelligence. When we visited the Clearpool booth they smiled and waved at our off-exchange analytics. They said they were working on building something similar. Upon further inquiry this meant someone at Clearpool had a spreadsheet somewhere populated with some FINRA data.

Each prospect we’ve visited who uses Clearpool tells us that off-exchange data and analytics are coming to Clearpool. As of 2022, we haven’t seen them. Maybe they will come, maybe they won’t. Lacking FINRA data did not prevent Bank of Montreal (BMO) from acquiring Clearpool for an undisclosed sum in 2020.

It is worth paying attention to what firms choose not to do. Clearpool had decided the cost of integrating FINRA data was not worth the benefits. While we can’t know the calculus of Clearpool’s cost-benefit analysis, the product decision was clear. And if you’re trying to be honest with your business, it’s important to understand why successful firms in your space are ignoring the same data into which you are investing resources.

I had more reason to pause and reflect on our efforts when I explained to the head of an ATS provider what we were doing with the FINRA data. How we were sourcing, organizing, analyzing, and delivering off-exchange analytics. It was an unexpected encounter on the street. My explanation was a response to the “What are you up to?” question. Of all the people who might care about off-exchange analytics, I thought, the head of an ATS had to be one.

I’ll never forget his response. Smiling, but seriously, and slowly, he asked, “Why would you do that?”

Uh-oh.

I nervous-laughed and gave a short answer. The voice in my head said, “one of us is crazy”. I spent the rest of the night assessing the odds it might be me.

Then Bridgewater dropped their name into our website. They wanted a demo of our off-exchange analytics.

When the largest hedge fund in the world contacts you unsolicited, seeking a demo, you wonder if it’s a signal. With $140B in assets under management, Bridgewater was not our target market. Point Focal aims to help asset managers who do not have Bridgewater resources and capabilities. Further, we are not focused on quantitative hedge funds who want raw data from data-as-a-service (DaaS) providers.

Nevertheless, we were excited to understand Bridgewater’s interest and show them what we were building. Three people from Bridgewater joined our demo. A portfolio manager, an analyst, and, according to his LinkedIn profile, Bridgewater’s “Global Market Data Leader; Data Ranger & Finder”. This was a new persona.

The demo was brief, but thorough. The communication was brief and direct. There was real interest in our off-exchange analytics. In hindsight it may have been more intrigue than interest. Our Estimize post-earnings drift views also warranted demo time and discussion.

Unsurprisingly, Bridgewater’s intent and ambition were poker-faced and close-to-the-vest. They did reveal they were building similar off-exchange analytics. The data ranger contemplated whether it would be prudent for Bridgewater to subscribe to our analytics rather than continue to build something similar with internal resources. The PM and the analyst weren’t sure.

We set up trial access for the PM, the analyst, and the data ranger. There was a bit of back and forth and a second demo. At the time, our platform was too immature to track user activity. We suspect they never logged in.

In our last exchange, the data ranger said two things.

1) They were probably going to build it themselves.

2) Come back when you have 605 and 606 data.

Our favorite objection to a Point Focal subscription is “we’ll build it ourselves”. This has become such a common objection, we preempt it in demos. We explain to head traders that when we leave the trading floor, their IT department will tell them they can build it themselves.

We go on to explain that they’re right, they could build it. But if they were actually going to build it, it would already be built. And the fact that it will take thousands of man-hours to build means it will not be prioritized because it’s not their IT mandate, not their business value proposition, and not aligned with corporate incentives that allocate resources.[3]

This we’ll-build-it-ourselves notion is a fallacy for the vast majority of asset managers. Even large asset managers. But when Bridgewater says they’re going to build it themselves, they’re probably going to build it themselves.

And they should. They are the exception to the rule.

But this demo was not noise. It was signal.

The head trader who said, “We have to be in this beta program” put his team in our beta program. They had deep domain knowledge of on- and off-exchange market structure. But they also had something we would learn is rare in capital markets. They understood how to apply market structure analytics to trading strategies.

This is why the light bulb went off when they saw our analytics. It wasn’t merely information reporting or theoretical scenario analyses they envisioned. It was the practical application of integrating analytics into their trading workflow.

When we get past the “We’ll build it ourselves” objection, we get to the real objection, “We don’t understand how to get your analytics into our workflow”. This is the challenge all portfolio analytics firms face when selling into asset managers and service providers.

The financial technology stack is difficult to break into. Application real estate is sacred. Legacy technology players are entrenched with sticky services protected by moats of high barriers to entry. To insert your product into this stack requires real innovation. Not the kind generally trumpeted from Chief Innovation Officers. Innovation that demonstrates growth through improved performance and risk management.

On this trading desk we had found a team who understood our analytics, how to integrate them into their workflows, and how to use them to grow their business. This was the product-market fit we were chasing.

This firm was engaged. We met every other week to exchange feedback. They provided input that informed product views. We created workflows that made it easy for them to use our product alongside their existing fintech stack. When we exited beta they became our first paying customer.

The combination of their domain knowledge and our analytics produced knowledge that improved their trading strategy. And if you can improve a global asset service provider’s trading strategy, you have a business. It doesn’t matter how much the underlying data costs, how messy it is, or how challenging it is to organize.

All that matters is whether the analytics tell a story that improves performance and risk management.[4]

We’ve learned a lot from when we first manually downloaded FINRA data to when we used it to win our first customer. Here are the lessons:

Data cost is not always positively correlated with data value.

To integrate analytics into trading workflows, ~~talk~~ listen to traders.[5]

Invest time and resources into engaged prospects. Like so much in life, input drives output. I explain the exception to this rule in the Signals pod “Crossing the Chasm”.

The timing of head trader demo interruptions matters.

If people are asking if you’re crazy, you may be on to something.

In the world of data and analytics, little insights can make big differences. They lead to business knowledge, which produces better decisions, which improve performance and risk management, which wins new business.

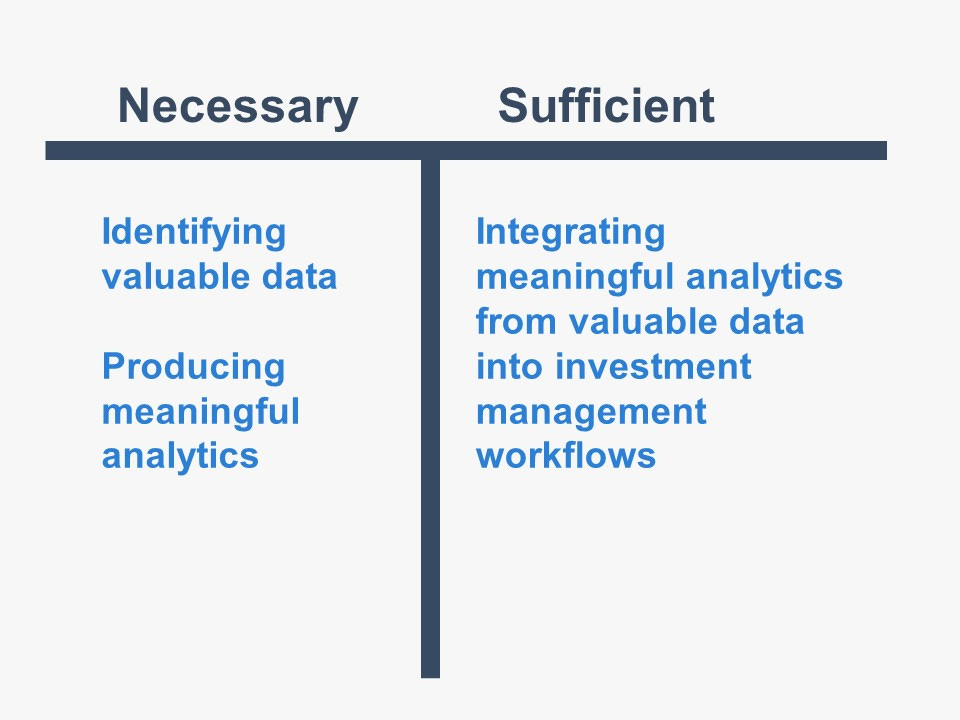

The analytic value chain inevitably leads to growth. If the chain breaks down before growth occurs, the insights are insufficient. And even with meaningful insight, analytics must be integrated into investment management workflows to link the chain and capture growth. Isolated analytics cannot break into the chain. Stranded outside of workflows, they end up in the analytic grave of wasted resources.

Identifying valuable data is necessary, but not sufficient. Producing meaningful analytics from valuable data is necessary, but not sufficient. Integrating meaningful analytics from valuable data into investment management workflows is sufficient. This is where the analytic value chain is connected end-to-end and the magic happens.

It is how little insights lead to business growth.

Afterword

Shortly after Bridgewater told us to come back when we had 605 and 606 data, Tim Quast from Modern IR requested a demo. Modern IR provides market structure insight for corporate investor relations teams. (Investor relations is the IR.) Tim thought our analytics might help his IR reporting.

We met late on a Friday, late in 2021. Tim’s breadth of market structure knowledge was overwhelming and offset by a sharp sense of humor with an untraceable accent.[6] We spent eighty minutes sharing product stories, market structure humor, and platform demos.

There was something that made sense between Modern IR and Point Focal, some sort of complementary translation that begged exploration. If our firms were a Venn diagram, the overlapping section was truly novel, even if at the time we didn’t quite understand the overlap.

Tim and I agreed there was something here worth exploring. Then 2022 arrived, we both got busy with our businesses, and we fell out of touch. I followed Tim’s Modern IR progress and his Market Structure EDGE business launch from a distance.

In EDGE Tim had created a market structure sentiment model of demand and supply. Underneath the model was 605 and 606 data. Best execution and short volume information. Our business overlap was becoming clear.

Tim and I remained focused on our respective products in ’22. But I kept thinking about our overlap. Tim’s content and domain knowledge and our client workflows and institutional analytics.

In October I invited Tim to Boston to join me on a Boston Securities Traders Association (BSTA) panel. I knew he would be a wildly entertaining and educational panelist. A perfect misfit. But selfishly, I wanted to show Tim the institutional community in which we are embedded. I wanted to understand Modern IR and Market Structure EDGE. I wanted to understand our overlap and bring it to market together.

Tim graciously said yes and arrived in Boston in cowboy boots. The panel was a blast. Turnout was great and there was a feeling of post-COVID normalcy. Tim was hysterically informative in the greatest way.

I made it clear to Tim I wanted to help bring EDGE to institutions. He smilingly pondered the thought while absorbing the portfolio management and trading community before us. Afterwards, he returned to Colorado and we both got crazy again developing our products.

Then a month later I got an email from Tim.

Subject line: “EDGE for Institutions”.

Message: “Let’s figure this out.”

Figuring this out has led to an exciting collaboration between ModernIR, Market Structure EDGE, and Point Focal. Together we’re enriching market structure sentiment analytics. And we’re designing a more complete investor relations dashboard combining market structure with earnings, ESG, and news information.

Like our BSTA panel, our goal is to educate and help those who can benefit from a truer understanding of how market structure explains market behavior.[7]

[1] I will publish a Focus Signal piece on our tech stack evolution in the coming months.

[2] Writing this out makes me want to write the book. Working title, “Crossed”.

[3] Some of our favorite customers are those who have learned from time that their IT department is not actually going to build it.

[4] The fact that we won business from our FINRA analytics neither proves I’m not crazy nor proves the head of the ATS who asked, “Why would you do that?” is crazy.

[5] Likewise, to integrate into portfolio management workflows, ~~talk~~ listen to portfolio managers. For research analyst workflows, ~~talk~~ listen to research analysts. This seems obvious when stated. However, it’s easy to fall into the trap of build-the-technology-and-traders,-PMs,-and-analysts-will-come. This is not the case. If you want access to their workflows, you must understand their workflows. And to understand their workflows, you must ~~talk~~ listen to them.

[6] Turns out it’s Oregonian.

[7] Subscribe to Focus Signal to read the Market Structure EDGE story when it’s published!